Network architecture

encoder–decoder architecture

- 20 conv

- 4 max pooling

- 4 upsampling

- 2 dropout

- 4 skip connections.

이미지 한 변의 길이가 1km라고 생각하면 됨. 픽셀의 각 값은 강수량을 로그 변환한 값임

Each grid contains 928 cells×928 cells with an edge length of 1 km; for each cell, the input value is the logarithmic precipitation depth as retrieved from the radar-based precipitation product.

RainNet은 CNN 구조로만 이루어짐. 왜냐? RNN 구조보다 학습에 더 안정적이고, 더 정확하게 예측한다.

RainNet differs fundamentally from ConvLSTM

in several application domains, convolutional neural networks have turned out to be numerically more stable during training and make more accurate predictions than these recurrent neural networks. Therefore, RainNet uses a fully convolutional architecture and does not use LSTM layers to propagate information through time.

그 다음 시간인 t+10 min 어떻게 예측?

apply RainNet recursively.

- t-15, t-10, t-5, t 로 t+5 예측

- -> t-10, t-5, t, t+5로 t+10 예측

Optimization procedure

logcosh 사용하는 이유?

using the logcosh loss function is beneficial for the optimization of variational autoencoders (VAEs) in comparison to mean squared error.

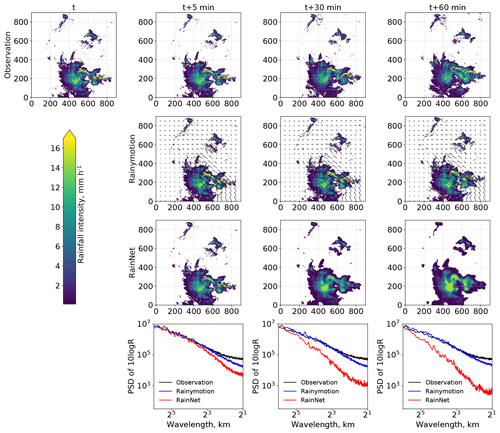

Hits, false alarms, and misses are defined by the contingency table and the corresponding threshold value. Quantities Pn and Po represent the nowcast and observed fractions, respectively, of rainfall intensities exceeding a specific threshold for a defined neighborhood size.

We have applied threshold rain rates of 0.125, 1, 5, 10, and 15 mm h−1 for calculating the CSI and the FSS. For calculating the FSS, we use neighborhood (window) sizes of 1, 5, 10, and 20 km.

MAE?

The MAE captures errors in rainfall rate prediction (the fewer the better)

CSI?

and CSI (the higher the better) captures model accuracy – the fraction of the forecast event that was correctly predicted – but does not distinguish between the sources of errors.

FSS ?

The FSS determines how the nowcast skill depends on both the threshold of rainfall exceedance and the spatial scale

gmd.copernicus.org/articles/13/2631/2020/

RainNet v1.0: a convolutional neural network for radar-based precipitation nowcasting

Abstract. In this study, we present RainNet, a deep convolutional neural network for radar-based precipitation nowcasting. Its design was inspired by the U-Net and SegNet families of deep learning models, which were originally designed

gmd.copernicus.org

RainNet v1.0 설명 (Spatiotemporal prediction)

Network architecture

Its architecture was inspired by the U-Net and SegNet families of deep learning models for binary segmentation (Badrinarayanan et al., 2017; Ronneberger et al., 2015; Iglovikov and Shvets, 2018) .

encoder–decoder architecture

- 20 conv

- 4 max pooling

- 4 upsampling

- 2 dropout

- 4 skip connections.

이미지 한 변의 길이가 1km라고 생각하면 됨. 픽셀의 각 값은 강수량을 로그 변환한 값임

- Each grid contains 928 cells×928 cells with an edge length of 1 km; for each cell, the input value is the logarithmic precipitation depth as retrieved from the radar-based precipitation product.

RainNet은 CNN 구조로만 이루어짐. 왜냐? RNN 구조보다 학습에 더 안정적이고, 더 정확하게 예측한다.

- RainNet differs fundamentally from ConvLSTM

- in several application domains, convolutional neural networks have turned out to be numerically more stable during training and make more accurate predictions than these recurrent neural networks. Therefore, RainNet uses a fully convolutional architecture and does not use LSTM layers to propagate information through time.

그 다음 시간인 t+10 min 어떻게 예측?

- apply RainNet recursively.

- t-15, t-10, t-5, t 로 t+5 예측

- -> t-10, t-5, t, t+5로 t+10 예측

Optimization procedure

logcosh 사용하는 이유?

- using the logcosh loss function is beneficial for the optimization of variational autoencoders (VAEs) in comparison to mean squared error.

Mean Absolute Error, Critical Success Index

Hits, false alarms, and misses are defined by the contingency table and the corresponding threshold value. Quantities Pn and Po represent the nowcast and observed fractions, respectively, of rainfall intensities exceeding a specific threshold for a defined neighborhood size.

We have applied threshold rain rates of 0.125, 1, 5, 10, and 15 mm h−1 for calculating the CSI and the FSS. For calculating the FSS, we use neighborhood (window) sizes of 1, 5, 10, and 20 km.

MAE?

The MAE captures errors in rainfall rate prediction (the fewer the better)

CSI?

and CSI (the higher the better) captures model accuracy – the fraction of the forecast event that was correctly predicted – but does not distinguish between the sources of errors.

FSS ?

The FSS determines how the nowcast skill depends on both the threshold of rainfall exceedance and the spatial scale

gmd.copernicus.org/articles/13/2631/2020/

RainNet v1.0: a convolutional neural network for radar-based precipitation nowcasting

Abstract. In this study, we present RainNet, a deep convolutional neural network for radar-based precipitation nowcasting. Its design was inspired by the U-Net and SegNet families of deep learning models, which were originally designed

code

from keras.models import *

from keras.layers import *

def rainnet(input_shape=(928, 928, 4), mode="regression"):

"""

The function for building the RainNet (v1.0) model from scratch

using Keras functional API.

Parameters:

input size: tuple(W x H x C), where W (width) and H (height)

describe spatial dimensions of input data (e.g., 928x928 for RY data);

and C (channels) describes temporal (depth) dimension of

input data (e.g., 4 means accounting four latest radar scans at time

t-15, t-10, t-5 minutes, and t)

mode: "regression" (default) or "segmentation".

For "regression" mode the last activation function is linear,

while for "segmentation" it is sigmoid.

To train RainNet to predict continuous precipitation intensities use

"regression" mode.

RainNet could be trained to predict the exceedance of specific intensity

thresholds. For that purpose, use "segmentation" mode.

"""

inputs = Input(input_shape)

conv1f = Conv2D(64, 3, padding='same', kernel_initializer='he_normal')(inputs)

conv1f = Activation("relu")(conv1f)

conv1s = Conv2D(64, 3, padding='same', kernel_initializer='he_normal')(conv1f)

conv1s = Activation("relu")(conv1s)

pool1 = MaxPooling2D(pool_size=(2, 2))(conv1s)

conv2f = Conv2D(128, 3, padding='same', kernel_initializer='he_normal')(pool1)

conv2f = Activation("relu")(conv2f)

conv2s = Conv2D(128, 3, padding='same', kernel_initializer='he_normal')(conv2f)

conv2s = Activation("relu")(conv2s)

pool2 = MaxPooling2D(pool_size=(2, 2))(conv2s)

conv3f = Conv2D(256, 3, padding='same', kernel_initializer='he_normal')(pool2)

conv3f = Activation("relu")(conv3f)

conv3s = Conv2D(256, 3, padding='same', kernel_initializer='he_normal')(conv3f)

conv3s = Activation("relu")(conv3s)

pool3 = MaxPooling2D(pool_size=(2, 2))(conv3s)

conv4f = Conv2D(512, 3, padding='same', kernel_initializer='he_normal')(pool3)

conv4f = Activation("relu")(conv4f)

conv4s = Conv2D(512, 3, padding='same', kernel_initializer='he_normal')(conv4f)

conv4s = Activation("relu")(conv4s)

drop4 = Dropout(0.5)(conv4s)

pool4 = MaxPooling2D(pool_size=(2, 2))(drop4)

conv5f = Conv2D(1024, 3, padding='same', kernel_initializer='he_normal')(pool4)

conv5f = Activation("relu")(conv5f)

conv5s = Conv2D(1024, 3, padding='same', kernel_initializer='he_normal')(conv5f)

conv5s = Activation("relu")(conv5s)

drop5 = Dropout(0.5)(conv5s)

up6 = concatenate([UpSampling2D(size=(2, 2))(drop5), conv4s], axis=3)

conv6 = Conv2D(512, 3, padding='same', kernel_initializer='he_normal')(up6)

conv6 = Activation("relu")(conv6)

conv6 = Conv2D(512, 3, padding='same', kernel_initializer='he_normal')(conv6)

conv6 = Activation("relu")(conv6)

up7 = concatenate([UpSampling2D(size=(2, 2))(conv6), conv3s], axis=3)

conv7 = Conv2D(256, 3, padding='same', kernel_initializer='he_normal')(up7)

conv7 = Activation("relu")(conv7)

conv7 = Conv2D(256, 3, padding='same', kernel_initializer='he_normal')(conv7)

conv7 = Activation("relu")(conv7)

up8 = concatenate([UpSampling2D(size=(2, 2))(conv7), conv2s], axis=3)

conv8 = Conv2D(128, 3, padding='same', kernel_initializer='he_normal')(up8)

conv8 = Activation("relu")(conv8)

conv8 = Conv2D(128, 3, padding='same', kernel_initializer='he_normal')(conv8)

conv8 = Activation("relu")(conv8)

up9 = concatenate([UpSampling2D(size=(2, 2))(conv8), conv1s], axis=3)

conv9 = Conv2D(64, 3, padding='same', kernel_initializer='he_normal')(up9)

conv9 = Activation("relu")(conv9)

conv9 = Conv2D(64, 3, padding='same', kernel_initializer='he_normal')(conv9)

conv9 = Activation("relu")(conv9)

conv9 = Conv2D(2, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)

if mode == "regression":

outputs = Conv2D(1, 1, activation='linear')(conv9)

elif mode == "segmentation":

outputs = Conv2D(1, 1, activation='sigmoid')(conv9)

model = Model(inputs=inputs, outputs=outputs)

return model

'Data-science > deep learning' 카테고리의 다른 글

| conda 가상환경 구축 (0) | 2020.10.28 |

|---|---|

| trajactory GRU 코드 (0) | 2020.10.23 |

| stylegan2 & stylegan2-ada 코드 (loss & network) (0) | 2020.10.17 |

| [StyleGan2-ada 실습] AFHQ 데이터 셋 이용해서 stylegan2-ada 학습하기 2 (0) | 2020.10.16 |

| [StarGan v2] AFHQ 데이터 셋 및 pretrained network 성능 (0) | 2020.10.15 |